Indeed just unveiled a new way to make job hunting less frustrating

The recruitment platform is the latest in a long line of companies exploring integrations of generative AI.

Fast Facts

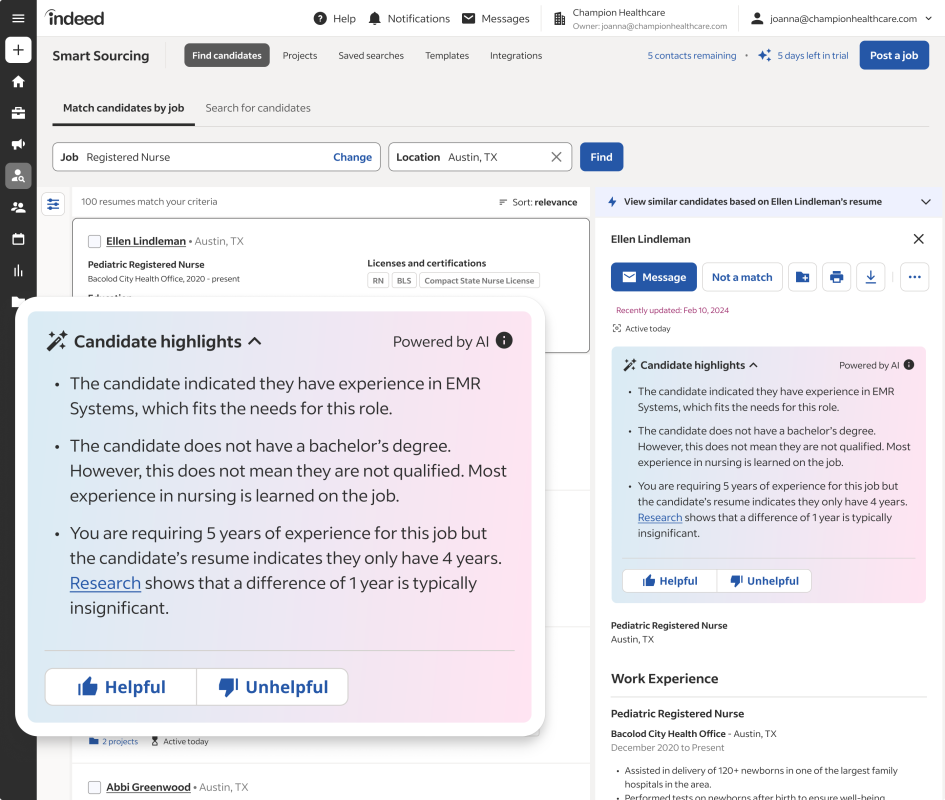

- Indeed on Tuesday launched Smart Sourcing, an AI-powered product suite.

- The intention is to quickly and efficiently connect employers with the most relevant candidates.

- The suite will offer 'instant recommendations' to employers based on active job listings.

Like the internet before it, artificial intelligence has taken the world by storm. In these early days of generative AI, companies have spent months pushing to apply and integrate the technology in everything from internet search to email writing.

The latest integration the tech sector has dreamed up is a marriage between generative AI and the exhausting trudge that is the job hunt.

Digital-recruitment platform Indeed (RCRUY) on Tuesday announced Smart Sourcing, a suite of generative AI-powered tools designed to make job searching easier and more efficient for all parties involved.


For job seekers: Update to Indeed Profile

The Smart Sourcing launch comes hand in hand with an update to Indeed Profile, which, according to the company, is meant to help job seekers better represent themselves to potential employers.

The update will let users make themselves visible to employers and open to their outreach. The AI offerings include an AI-powered writing tool that helps people describe their work experience on their Indeed Profiles.

Indeed did not specify when this feature would become available but said it was coming soon.

For employers: a number of new features

The bulk of the new features, however, are coming to employers.

Smart Sourcing lets employers receive instant recommendations for the most relevant candidates for any active job listing. It specifically surfaces people who are actively seeking jobs and have been active on Indeed within the past 30 days.

These recommendations include AI-generated candidate summaries, which explain whether a candidate meets the stated qualifications and whether they might be a good fit for the role.

The platform then lets employers send AI-generated messages to their preferred candidates, a feature meant to speed up the interviewing process.

Indeed said 92% of the employers already using the platform called it their "preferred product for finding active candidates." Indeed did not disclose how many employers are already using Smart Sourcing.

Indeed Chief Executive Chris Hyams said in a statement that hiring remains a "challenging and inefficient" process, and that he hopes the new AI-powered tools will "transform" the job-search market.


Algorithmic discrimination: a major concern

This latest push to integrate AI-powered decision-making technology into yet more verticals comes amid ongoing, unresolved concerns about algorithmic bias, discrimination and hallucination.

Hallucinations, as AI researcher and cognitive scientist Gary Marcus has regularly pointed out, occur when the output of a generative AI application is confidently wrong. Hallucinations, according to Marcus, are an unavoidable hallmark of the large language model paradigm.

At the same time, such models have become well-known for exhibiting bias and discrimination.

Bloomberg conducted a study last year of the text-to-image model Stable Diffusion, finding that "the world according to Stable Diffusion is run by white male CEOs. Women are rarely doctors, lawyers or judges. Men with dark skin commit crimes, while women with dark skin flip burgers."

Numerous studies have identified a similar issue; one found that the output of three popular text-to-image generators "consistently under-represent[ed] marginalized identities."

The issue is not just with image generators; several other recent studies have highlighted problems of bias inherent to the large-language-model architecture. The problem derives largely from unfair or biased training data, something that's difficult to address when the internet at large is the data source in question.

"It is clear that these powerful computational tools, if not diligently designed and audited, have the potential to perpetuate and even amplify existing biases, particularly those related to race, gender and other societal constructs," a 2023 paper states.

A 2024 Unesco study found that such bias disproportionately affects women and girls, saying specifically that the bias has been perpetuated in the AI-assisted job-recruitment process.

The study states that LLMs can "reinforce stereotypes, biases and violence" against women and girls through discriminatory AI-powered decision-making, such as an AI recruitment tool discounting a qualified female candidate.


Indeed and ethical AI: a Responsible-AI team

Asked at a news conference Monday about such issues, Raj Mukherjee, an executive vice president at Indeed, said that AI has the power to "transform society but also can be harnessed to harm people."

He cited Indeed's responsible-AI team, which he said works to make the product "more fair and less biased."

He did not explain how Indeed achieves this.

"It's not that we are making it so obscure why someone has been a matched candidate for a particular role," Maggie Hulce, an executive vice president at Indeed, added. "We're being really clear on what are the dimensions that seem to be well-aligned."

The executives did not specify how Indeed is ensuring that the Smart Sourcing technology is fair and unbiased. Indeed's head of responsible AI, Trey Causey, told TheStreet that no system can ever be completely unbiased. Causey did not immediately respond to a request to elaborate on this point.

Causey did say that Indeed uses a "combination of qualitative and quantitative data to evaluate those systems’ performance along a number of dimensions, including fairness. Users are provided with mechanisms to provide feedback about GenAI outputs and we use that feedback to continually improve our systems."

Contact Ian with tips and AI stories via email, [email protected], or Signal 732-804-1223.
