Can AI ‘feel’ guilt?

Research based on game theory suggests that if we program AI agents with a sense of guilt, they could behave more cooperatively, much as humans do.

Artificial intelligence that ‘feels’ guilt could lead to more cooperation

Developing a sense of guilt helps social networks cooperate, new research suggests.

Peter Dazeley/Getty Images

Some sci-fi scenarios depict robots as cold-hearted clankers eager to manipulate human stooges. But that’s not the only possible path for artificial intelligence.

Humans have evolved emotions like anger, sadness and gratitude to help us think, interact and build mutual trust. Advanced AI could do the same. In populations of simple software agents (like characters in “The Sims” but much, much simpler), having “guilt” can be a stable strategy that benefits them and increases cooperation, researchers report July 30 in Journal of the Royal Society Interface.

Emotions are not just subjective feelings but bundles of cognitive biases, physiological responses and behavioral tendencies. When we harm someone, we often feel compelled to pay a penance, perhaps as a signal to others that we won’t offend again. This drive for self-punishment can be called guilt, and that is how the researchers programmed it into their agents. The question was whether agents that had it would be outcompeted by those that didn’t, say Theodor Cimpeanu, a computer scientist at the University of Stirling in Scotland, and colleagues.

The agents played a two-player game with their neighbors called the iterated prisoner’s dilemma. The game has roots in game theory, a mathematical framework for analyzing multiple decision makers’ choices based on their preferences and individual strategies. On each turn, each player “cooperates” (plays nice) or “defects” (acts selfishly). In the short term, you win the most points by defecting, but that tends to make your partner start defecting, so everyone is better off cooperating in the long run. The AI agents couldn’t feel guilt as richly as humans do but experienced it as a self-imposed penalty that nudges them to cooperate after selfish behavior.
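To make the short-term versus long-run trade-off concrete, here is a minimal sketch of a repeated prisoner’s dilemma. The payoff numbers (5, 3, 1, 0) are the textbook defaults, not values from the paper, and the two strategies (`always_defect`, `tit_for_tat`) are illustrative stand-ins rather than the six strategies the researchers used.

```python
# Illustrative payoffs only (textbook values, assumed; not from the paper).
# Key: (my move, partner's move) -> (my points, partner's points)
PAYOFFS = {
    ("C", "C"): (3, 3),  # both cooperate
    ("C", "D"): (0, 5),  # I cooperate, partner defects
    ("D", "C"): (5, 0),  # I defect, partner cooperates
    ("D", "D"): (1, 1),  # both defect
}


def play_round(move_a, move_b):
    """Return the points each player earns in a single round."""
    return PAYOFFS[(move_a, move_b)]


def always_defect(partner_last_move):
    """Hypothetical strategy: defect no matter what."""
    return "D"


def tit_for_tat(partner_last_move):
    """Hypothetical strategy: cooperate first, then copy the partner's last move."""
    return "C" if partner_last_move in (None, "C") else "D"


def play_repeated(strategy_a, strategy_b, rounds):
    """Play several rounds and return both players' total points."""
    total_a = total_b = 0
    last_a = last_b = None
    for _ in range(rounds):
        move_a = strategy_a(last_b)
        move_b = strategy_b(last_a)
        points_a, points_b = play_round(move_a, move_b)
        total_a += points_a
        total_b += points_b
        last_a, last_b = move_a, move_b
    return total_a, total_b


print(play_round("D", "C"))                            # (5, 0)
print(play_repeated(always_defect, tit_for_tat, 10))   # (14, 9)
print(play_repeated(tit_for_tat, tit_for_tat, 10))     # (30, 30)
```

Under these assumed payoffs, defecting wins a single round 5 to 0, but over 10 rounds the defector ends with 14 points while two mutual cooperators earn 30 each.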

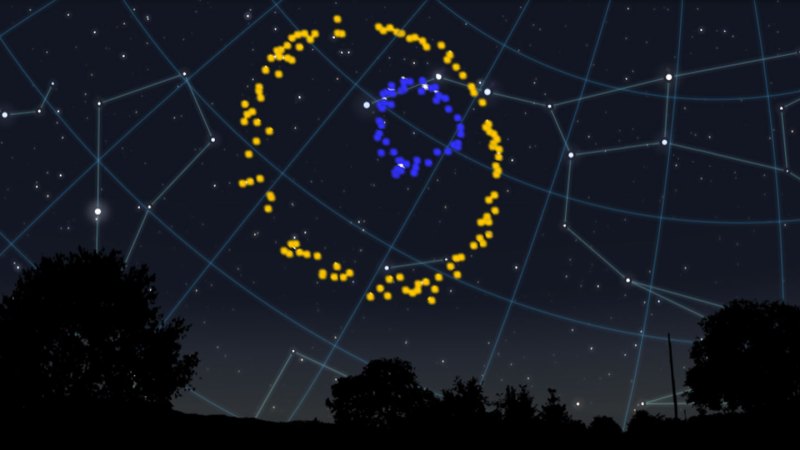

The researchers ran several simulations with different settings and social network structures. In each, the 900 players were each assigned one of six strategies defining their tendency to defect and to feel and respond to guilt. In one strategy, nicknamed DGCS for technical reasons, the agent felt guilt after defecting, meaning that it gave up points until it cooperated again. Critically, the AI agent felt guilt (lost points) only if it received information that its partner was also paying a guilt price after defecting. This prevented the agent from being a patsy, thus enforcing cooperation in others. (In the real world, seeing guilt in others can be tricky, but costly apologies are a good sign.)
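One way to read the guilt rule described above is as a small piece of bookkeeping. The sketch below is an interpretation, not the researchers’ code: the class name `GuiltProneAgent`, the `guilt_cost` value and the `partner_shows_guilt` flag are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class GuiltProneAgent:
    """Hypothetical agent that pays a self-imposed penalty after defecting."""

    guilt_cost: float = 1.0      # assumed penalty per round; not from the paper
    owes_penance: bool = False   # True from a defection until the next cooperation
    score: float = 0.0

    def record_round(self, my_move, payoff, partner_shows_guilt):
        """Update the score for one round, applying guilt where it applies."""
        self.score += payoff
        if my_move == "D":
            self.owes_penance = True
        elif my_move == "C":
            self.owes_penance = False
        # Pay the guilt cost only if the partner is known to pay one too,
        # so the agent is not exploited by guilt-free partners.
        if self.owes_penance and partner_shows_guilt:
            self.score -= self.guilt_cost


agent = GuiltProneAgent(guilt_cost=1.0)
agent.record_round("D", payoff=5, partner_shows_guilt=True)   # pays guilt
agent.record_round("C", payoff=3, partner_shows_guilt=True)   # guilt cleared
agent.record_round("D", payoff=5, partner_shows_guilt=False)  # no guilt paid
print(agent.score)  # 12.0
```

The conditional check in the last branch is what keeps the agent from being a patsy: against a partner that shows no guilt, it keeps its full payoff.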

The simulations didn’t model how guiltlike behavior might first emerge, only whether it could survive and spread once introduced. After each turn, agents could copy a neighbor’s strategy, with a probability of imitation based on neighbors’ cumulative score. In many scenarios, particularly when guilt was relatively low-cost and agents interacted only with their neighbors, DGCS became the dominant strategy, and most interactions became cooperative, the researchers found.
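The article does not spell out the exact imitation rule. A common choice in simulations of this kind is the Fermi function, in which the chance of copying a neighbor rises smoothly with how far the neighbor’s score exceeds your own. The sketch below assumes that rule; the function names and the `beta` parameter (how strongly score differences matter) are illustrative.

```python
import math
import random


def imitation_probability(my_score, neighbor_score, beta=0.1):
    """Fermi rule (assumed here): higher-scoring neighbors are copied more often."""
    exponent = -beta * (neighbor_score - my_score)
    # Clamp the exponent so math.exp cannot overflow on extreme score gaps.
    exponent = max(-700.0, min(700.0, exponent))
    return 1.0 / (1.0 + math.exp(exponent))


def maybe_imitate(my_strategy, my_score, neighbor_strategy, neighbor_score,
                  rng, beta=0.1):
    """Return the neighbor's strategy with the Fermi probability, else keep mine."""
    if rng.random() < imitation_probability(my_score, neighbor_score, beta):
        return neighbor_strategy
    return my_strategy


rng = random.Random(0)  # seeded for repeatability
print(imitation_probability(10, 10))  # 0.5 when scores are equal
print(maybe_imitate("D", 0, "DGCS", 50, rng))
```

With equal scores the copy probability is exactly one-half; a larger `beta` makes agents follow score differences more strictly, while a smaller one makes copying closer to random.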

We may want to program the capacity for guilt or other emotions into AIs. “Maybe it’s easier to trust when you have a feeling that the agent also thinks in the same way that you think,” Cimpeanu says. We may see emotions (at least the functional aspects, even if not the conscious ones) emerge on their own in groups of AIs if they can mutate or self-program, he says. As AIs proliferate, they could comprehend the cold logic to human warmth.

But there are caveats, says Sarita Rosenstock, a philosopher at The University of Melbourne in Australia who was not involved in the work but has used game theory to study guilt’s evolution. First, simulations embody many assumptions, so one can’t draw strong conclusions from a single study. But this paper contributes “an exploration of the possibility space,” highlighting areas where guilt is and is not sustainable, she says.

Second, it’s hard to map simulations like these to the real world. What counts as a verifiable cost for an AI, besides paying actual money from a coffer? If you talk to a present-day chatbot, she says, “it’s basically free for it to say I’m sorry.” With no transparency into its innards, a misaligned AI might feign remorse, only to transgress again.
