Nvidia makes its biggest purchase ever

According to CNBC, Nvidia has agreed to pay about $20 billion in cash for Groq, a specialist in AI accelerator chips used to power inference—the stage where trained AI models actually answer questions, generate text, or drive real-time applications. Alex Davis, CEO of investment firm Disruptive, which led Groq’s latest financing round, told CNBC that the deal came together quickly, months after Groq raised $750 million at a valuation of roughly $6.9 billion.

Disruptive has invested more than $500 million in Groq since the chipmaker was founded in 2016, and Groq is now expected to notify investors of the Nvidia acquisition as details are finalized. The acquisition reportedly includes Groq's core chip design and related assets but excludes the company's early-stage Groq Cloud business, which offers developers API-based access to its hardware.

From Nvidia’s side, the financial capacity is clear. The chip giant ended October with around $60.6 billion in cash and short-term investments, up sharply from roughly $13.3 billion at the start of 2023, powered by an explosion in demand for its AI GPUs. An all-cash structure means no dilution for existing shareholders, but it also telegraphs Nvidia’s conviction that securing Groq’s technology will pay off over the long term, even at a steep premium.

Why this is Nvidia’s biggest acquisition ever

This Groq deal instantly becomes Nvidia’s largest acquisition by total value, surpassing its $6.9 billion purchase of Israeli networking company Mellanox in 2019. Mellanox gave Nvidia high-speed networking and interconnect technology that became crucial to building out large AI clusters, effectively turning Nvidia from a GPU vendor into a full-stack data center platform provider.

Nvidia has tried to go even bigger before. In 2020, it announced plans to buy British chip designer Arm from SoftBank for a combination of cash and stock worth up to $40 billion, a move that would have reshaped the global semiconductor landscape. That deal collapsed under regulatory pressure in 2022, after authorities in the U.K., European Union, and U.S. raised concerns that Nvidia could gain too much influence over licensing of Arm’s CPU designs.

The Groq acquisition is smaller in dollar terms than the failed Arm attempt but is still huge for a single-technology target. By spending nearly three times Groq’s last valuation, Nvidia is signaling that inference hardware is not just a side business but a core pillar of where it wants AI revenue to grow next.
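A quick back-of-the-envelope check of the "nearly three times" figure, using only the approximate numbers reported above (about $20 billion for Groq, a roughly $6.9 billion last valuation, and about $60.6 billion in Nvidia cash and short-term investments). These are rounded press figures, so the results are rough ratios, not deal terms.

```python
# Approximate figures as reported, in billions of U.S. dollars
deal_price = 20.0       # reported all-cash price for Groq
last_valuation = 6.9    # Groq's valuation at its $750 million funding round
nvidia_cash = 60.6      # Nvidia's cash and short-term investments at end of October

# How many times Groq's last private valuation Nvidia is reportedly paying
premium_multiple = deal_price / last_valuation

# Rough share of Nvidia's cash pile the purchase price represents
cash_share = deal_price / nvidia_cash

print(f"Price vs. last valuation: {premium_multiple:.1f}x")
print(f"Share of Nvidia's cash: {cash_share:.0%}")
```

On these rounded inputs the price works out to roughly 2.9 times Groq's last valuation, or about a third of Nvidia's reported cash and short-term investments.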

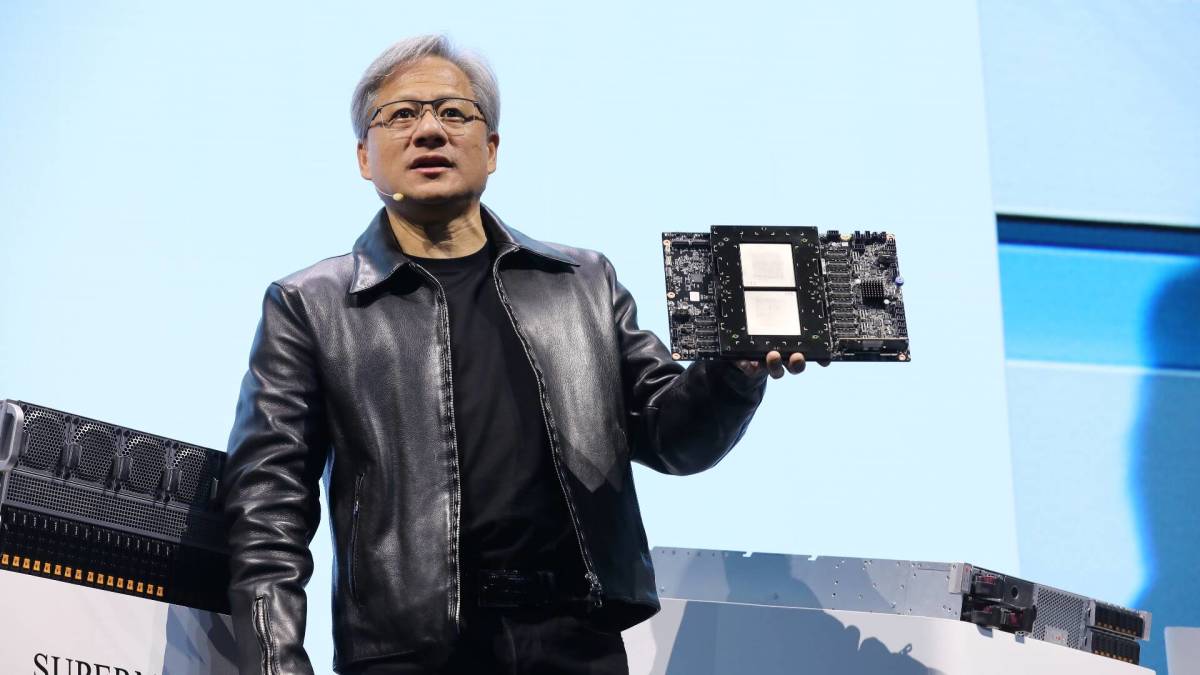

The AI inference race heats up

The AI chip market has two big buckets: training and inference. Training is where massive models are built, often using thousands of Nvidia GPUs in data centers run by companies like Microsoft, Amazon, and Google. Inference is where those models are actually used for search, chatbots, copilots, AI video, and any application that needs fast, repeated responses at scale.

Groq has positioned itself as a pure-play inference specialist. Coverage from analyst-focused outlets such as AInvest says Groq's hardware can deliver extremely low latency, with some of the company's marketing claiming speeds up to twice those of rival systems on select workloads without sacrificing accuracy. That performance promise, along with a simplified programming model, made Groq an attractive option for developers who wanted something faster and more predictable than general-purpose GPUs for production workloads.

Regulatory scrutiny and antitrust risks

Because Nvidia already sits at the center of the AI hardware world, any major deal it does is going to raise eyebrows in Washington, Brussels, London, and Beijing. Reuters notes that regulators have closely watched Nvidia’s expanding role in AI compute, and the Groq deal is expected to face antitrust review in multiple jurisdictions.

The key questions will likely center on whether acquiring Groq significantly reduces competition in AI inference hardware and whether Nvidia could use control over Groq’s chips to disadvantage rivals or foreclose alternatives for cloud providers and enterprises. Nvidia has argued in past transactions that integrating acquired technologies into its stack benefits customers by improving performance and innovation, but regulators have become more sensitive to vertical consolidation in critical digital infrastructure.

For investors, the risk is not only that regulators could block the transaction (as happened with Arm) but also that they might impose remedies. Those could include requirements around licensing, interoperability, or access to Groq’s technology for third parties at fair terms, which might reduce some of the strategic upside Nvidia is paying for.

What it means for investors and everyday savers

If you own Nvidia, this acquisition tells you a few important things about where the company sees the puck going.

- First, Nvidia expects AI demand to shift from a build-out phase, where companies scramble to train models, into a deployment phase where inference workloads explode across industries and devices. Owning specialized inference technology becomes a way to capture that second wave of spending and to keep customers from defecting to rivals that pitch cheaper or more efficient hardware for production use.

- Second, Nvidia is leaning into an ecosystem strategy rather than a product-only strategy. By combining GPUs, networking (from Mellanox), software (CUDA and related libraries), and specialized accelerators (Groq’s chips), Nvidia can offer end-to-end solutions that are harder for competitors like AMD, Intel, or cloud providers’ custom chips to displace. For the average investor, that looks like a moat—but it also concentrates risk in one company.

- Third, consolidation at the chip level can eventually show up in your wallet. If Nvidia can use Groq’s technology to make inference cheaper and more efficient, that could lower the cost for startups and enterprises to deliver AI services, which in turn could mean more competition and better tools for consumers and small investors. But if fewer independent chip options lead to higher prices or stricter vendor lock-in, cloud and software providers may pass on higher infrastructure costs, and some innovation could get squeezed out at the edges.

For long-term savers, the practical takeaway is that AI infrastructure is becoming a core part of the market story, not just a tech side plot. Nvidia’s willingness to spend $20 billion in cash on Groq reinforces the idea that controlling compute is like owning the toll road on the AI superhighway.