Nvidia CEO pours cold water on the AI power debate

Nvidia (NVDA) CEO Jensen Huang just swung a sledgehammer at the idea that AI will permanently cripple the power grid.

On the latest episode of the Joe Rogan Experience, Huang argued the exact opposite, that Nvidia’s unfathomable computing gains have pushed performance per watt so far ahead that AI’s long-term energy footprint might become “utterly minuscule.”

A decade of 100,000-fold efficiency improvements, he argues, rewrites the entire debate.

The focus then shifts to scale.

If AI becomes dramatically cheaper to run, it will spread everywhere, and then the real challenge is building the industrial base to back it all up.

The U.S., in particular, benefited from years of relatively cheap energy, supercharged by earlier pro-drilling policies, but Huang framed that as context, not politics.

He believes that energy growth powers industrial growth, which in turn facilitates job growth.

For investors, that translates into a broad-based U.S. capex cycle spread across power, electrical equipment, construction, and Nvidia systems that enable AI economics.

Photo by ANDREW CABALLERO-REYNOLDS on Getty Images

Nvidia’s CEO thinks AI’s energy problem is overhyped

On Joe Rogan's podcast, Huang effectively rewrote the AI energy debate.

Though Nvidia’s CEO acknowledged AI’s energy constraints, he said those would fade away, courtesy of, you guessed it, Nvidia.

He argues that Nvidia’s accelerated computing ultimately delivered a whopping 100,000x performance gain for computing in the past decade.

In his framing, classic Moore’s Law continues to make computing cheaper every year, and AI-powered computing is essentially Moore’s Law “on energy drinks.”
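To put the two growth rates side by side, here is a rough back-of-the-envelope sketch. The 100,000x-per-decade figure is Huang's claim as reported above; the two-year doubling period is an assumed textbook reading of Moore's Law, not something Huang specified, and the function names are purely illustrative.

```python
# Back-of-the-envelope comparison; not a forecast.
# ASSUMPTION: "classic" Moore's Law is modeled as a doubling every 2 years.

def annual_factor(total_gain: float, years: float) -> float:
    """Constant yearly multiplier that compounds to total_gain over `years`."""
    return total_gain ** (1.0 / years)

def gain_from_doubling(years: float, doubling_period_years: float) -> float:
    """Total gain after `years` if performance doubles every doubling period."""
    return 2.0 ** (years / doubling_period_years)

YEARS = 10
claimed_gain = 100_000  # Huang's claimed decade-long gain, per the article

per_year = annual_factor(claimed_gain, YEARS)
moore_decade = gain_from_doubling(YEARS, 2.0)

print(f"Implied yearly multiplier for 100,000x in a decade: {per_year:.2f}x")
print(f"Gain from doubling every 2 years over a decade: {moore_decade:.0f}x")
```

Under these assumptions, the claimed gain works out to roughly a tripling every year, against about a 32-fold gain per decade from a two-year doubling cadence.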

On Rogan, Huang said that,

From an investor's lens, that means:

- If AI remains energy-constrained, the platform delivering the best performance per watt wins; Huang tells us that’s Nvidia’s stack.

- That naturally feeds structural demand for DGX systems and Nvidia’s robust GPUs.

- Additionally, it makes the case for durable pricing power, as hyperscalers and governments may be willing to pay more for Nvidia to keep power bills and capex in check.

AI’s real bottleneck is energy

Contrary to what many believe, AI’s real problem isn’t imagination; it’s electricity.

Huang calls AI “energy-constrained,” and many in the tech fraternity agree with that notion. Microsoft CEO Satya Nadella recently warned that the next limit on AI isn’t GPUs but grid capacity.

Similarly, OpenAI CEO Sam Altman says AI and energy have effectively “merged into one,” and Tesla CEO Elon Musk is pairing his xAI supercomputer plans (Colossus) with power-plant-scale infrastructure.

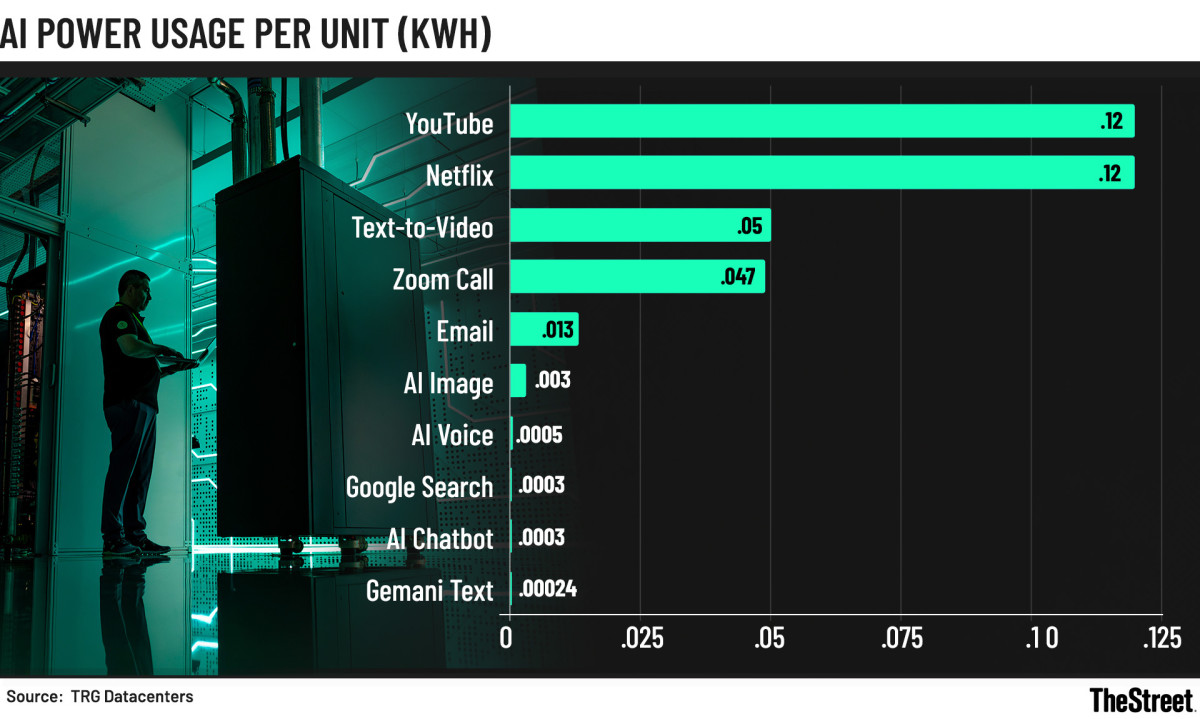

The carbon math backs those claims up:

- Tech sector emissions: 900 million tons of CO₂ last year, on track for 1.2 billion tons by 2025, per TRG Datacenters.

- Data-centre load: Global DC power usage could double by 2026, driven primarily by AI.

- Everyday digital reality:

  - 1 hour of streaming: 42g CO₂

  - 1 hour of Zoom: 17g CO₂

  - Short AI video: similar to a Zoom hour

  - AI image: 1g CO₂, 10 times a ChatGPT text query

Similarly, in the U.S., the EPRI projects data centres may need an additional 50 GW of generation by 2030.

For perspective, that is on the order of dozens of new power plants.
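As a rough sanity check on that "dozens of plants" comparison, the sketch below divides the projected 50 GW by a few plant sizes. The plant capacities are illustrative assumptions (about 1 GW is a common ballpark for a large reactor or big gas plant), not figures from EPRI, and the helper name is hypothetical.

```python
import math

# ASSUMPTION: plant capacities below are illustrative round numbers,
# not figures from EPRI or from the article.

def plants_needed(extra_gw: float, plant_gw: float) -> int:
    """Whole number of plants of size plant_gw needed to supply extra_gw."""
    return math.ceil(extra_gw / plant_gw)

EXTRA_GW = 50.0  # additional generation by 2030, per the EPRI projection cited above

for plant_gw in (0.5, 1.0, 2.0):
    count = plants_needed(EXTRA_GW, plant_gw)
    print(f"{plant_gw:.1f} GW plants needed: {count}")
```

Depending on the assumed plant size, the count lands between 25 and 100, which is consistent with "dozens."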

Tech behemoths like Amazon, Google, and Meta have signed a pledge supporting the goal of tripling global nuclear capacity by 2050, and that’s just the start of how the energy landscape evolves over the next few years.

Nvidia’s “30 days from failure” culture

Nvidia’s moat isn’t just in the chips it ships, but in the way Huang runs the company.

On Rogan, he shocked everyone by describing a work culture built around near-failure and the willingness to invest billions in ideas that aren’t necessarily viable at the time, but could rewrite the industry.

“We were 30 days from going out of business more times than I can count,” Huang says, and he means it.

The CUDA platform is the cleanest example.

The decision to develop a proprietary programming model doubled chip costs while crushing Nvidia’s healthy margins at the time.

However, the company’s belief in “GPU + parallel programming” eventually became the backbone of modern AI.

- Software lock-in: Major AI frameworks, including PyTorch, TensorFlow, and JAX, were optimized first for CUDA GPUs and arguably still run best on them.

- Market share: Nvidia dominates the AI accelerator market, boasting a market share in the 70–80% range in terms of sales.

- Money proof: Nvidia’s revenue now runs to tens of billions of dollars per quarter ($57 billion in Q3 alone, most of it from the Data Center segment), spearheaded by its robust AI GPU demand (H100, H200, Blackwell).

The story behind the DGX supercomputer is virtually the same.

Nvidia spent years and billions building a supercomputer it could hardly sell until its 2016 deliveries to OpenAI and Elon Musk unlocked the market.

According to Huang, each box cost $300,000, so that first unit was incredibly expensive to develop but financially tiny as a sale.

However, in both cases, the strategic importance is far bigger than the dollar value. The two lighthouse wins essentially paved the way for multi-billion-dollar follow-on demand.
